How does HEROSYS M.2 SSD dual fault tolerance ensure data stability?

A seemingly insignificant data error can lead to losses in the hundreds of thousands. In harsh industrial environments, data accuracy is not optional but a lifeline. Drawing on its self-developed controller chips, deep firmware expertise, and proprietary algorithms, HEROSYS has reduced its products' UBER (Uncorrectable Bit Error Rate) to an ultra-low 10⁻¹⁷, forging an unbreakable shield for critical data.
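To put 10⁻¹⁷ in perspective: UBER is usually counted as uncorrectable bit errors per bit read, so this rate corresponds to about one uncorrectable error per 10¹⁷ bits read, or roughly 12.5 PB of data. The short Python sketch below does that arithmetic; the per-bit-read definition and the decimal petabyte (10¹⁵ bytes) are the assumptions it rests on.

```python
UBER = 1e-17  # uncorrectable bit errors per bit read, as quoted above


def bits_read_per_error(uber: float) -> float:
    """Average number of bits read per uncorrectable bit error."""
    return 1.0 / uber


def expected_errors(bytes_read: float, uber: float) -> float:
    """Expected uncorrectable bit errors after reading `bytes_read` bytes."""
    return bytes_read * 8 * uber


if __name__ == "__main__":
    bits = bits_read_per_error(UBER)
    petabytes = bits / 8 / 1e15  # decimal petabytes (10**15 bytes)
    print(f"about {petabytes:.1f} PB read per uncorrectable bit error")
    print(f"expected errors after reading 1 PB: {expected_errors(1e15, UBER):.2f}")
```

Under these assumptions, reading a full petabyte yields an expected 0.08 uncorrectable errors.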

Bad Blocks

To understand why SSDs require ECC (Error-Correcting Code), it is essential to first understand bad blocks. A bad block is a memory block containing one or more unreliable bits. Bad blocks may be present when the device leaves the factory or develop during use, and they can make data inaccessible, reduce usable capacity, degrade performance, and eventually cause device failure.
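Because bad blocks can exist from the factory or appear during use, firmware typically tracks them in a table and redirects the affected logical blocks to reserved spare blocks. The Python sketch below is a hypothetical, simplified model of that bookkeeping: `BadBlockManager`, its methods, and the dictionary standing in for NAND storage are illustrative names, not HEROSYS firmware, and it assumes a failing block's data can still be read (with ECC's help) before it is retired.

```python
from __future__ import annotations


class BadBlockManager:
    """Hypothetical, simplified bad-block table backed by a pool of spare blocks."""

    def __init__(self, num_blocks: int, num_spares: int, factory_bad: set[int]):
        # Logical blocks 0..num_blocks-1 are user-visible; the next num_spares
        # physical blocks are held in reserve. Factory-bad spares are skipped.
        self.spares = [b for b in range(num_blocks, num_blocks + num_spares)
                       if b not in factory_bad]
        self.remap: dict[int, int] = {}  # logical block -> replacement block
        self.bad: set[int] = set(factory_bad)
        # Blocks that were already bad at the factory are remapped up front.
        for block in sorted(factory_bad):
            if block < num_blocks:
                self._assign_spare(block)

    def _assign_spare(self, logical: int) -> int:
        if not self.spares:
            # With no spares left, capacity shrinks and the drive eventually fails.
            raise RuntimeError("spare pool exhausted")
        replacement = self.spares.pop(0)
        self.remap[logical] = replacement
        return replacement

    def physical(self, logical: int) -> int:
        """Translate a logical block number into the physical block to access."""
        return self.remap.get(logical, logical)

    def mark_grown_bad(self, logical: int, storage: dict[int, bytes]) -> int:
        """Retire a block that failed during use and move its data to a spare."""
        old = self.physical(logical)
        self.bad.add(old)
        new = self._assign_spare(logical)
        if old in storage:
            storage[new] = storage.pop(old)
        return new


if __name__ == "__main__":
    storage = {5: b"user data"}          # simulated contents of physical block 5
    bbm = BadBlockManager(num_blocks=8, num_spares=2, factory_bad={3})
    print(bbm.physical(3))               # factory-bad block 3 is served by spare 8
    bbm.mark_grown_bad(5, storage)       # block 5 wears out during use
    print(bbm.physical(5), storage)
```

Once the spare pool is exhausted, `_assign_spare` raises, mirroring how accumulating bad blocks eventually lead to reduced capacity and device failure.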

NAND Flash Performance Degradation and Bit Errors

To manage bad blocks, SSD firmware combines ECC with NAND block mapping. When a bad block is detected, its data is moved to a free block, and error-correcting codes detect and correct bit errors so that invalid data is not returned to the host. As cell sizes shrink and the number of bits per cell increases, the following factors accelerate performance degradation and raise error rates:

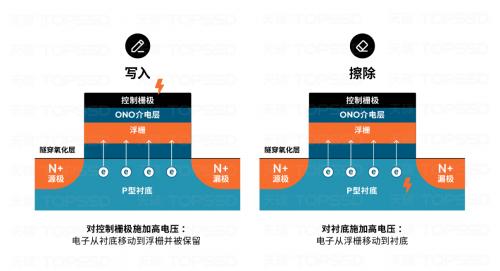

Program/Erase (P/E) Cycles: Every program and erase cycle applies high voltage to NAND cells, stressing and gradually weakening the tunnel oxide layer. This lets electrons leak from the floating gate and degrades the performance of NAND flash cells.

More Bits Per Cell: Newer flash technologies increase density by storing more bits per cell. This narrows the margins between threshold-voltage distributions, makes cells more sensitive to voltage shifts, and increases interference between tightly packed cells, all of which cause bit errors.
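Why more bits per cell shrinks the margins can be seen with a deliberately idealized model: a cell storing n bits must distinguish 2ⁿ threshold-voltage states, and if those states are spread evenly across a fixed voltage window, only 2ⁿ − 1 gaps separate them. The Python sketch below assumes that model; the 6.0 V window is a hypothetical value chosen for illustration, and the model ignores the width of each distribution.

```python
def state_spacing(window_v: float, bits_per_cell: int) -> float:
    """Gap between adjacent threshold-voltage states in an idealized cell.

    A cell storing n bits must distinguish 2**n states. Spacing them evenly
    across a fixed voltage window leaves 2**n - 1 gaps between them.
    """
    states = 2 ** bits_per_cell
    return window_v / (states - 1)


if __name__ == "__main__":
    WINDOW_V = 6.0  # hypothetical usable Vth window in volts, illustration only
    for name, bits in [("SLC", 1), ("MLC", 2), ("TLC", 3), ("QLC", 4)]:
        gap = state_spacing(WINDOW_V, bits)
        print(f"{name}: {2 ** bits:2d} states, about {gap:.2f} V apart")
```

Under these assumptions the spacing drops from 6/7 ≈ 0.86 V for TLC to 6/15 = 0.40 V for QLC, so the same voltage shift or interference is far more likely to push a cell across a read reference and flip a bit.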

Error Detection and Correction in NAND Flash Devices

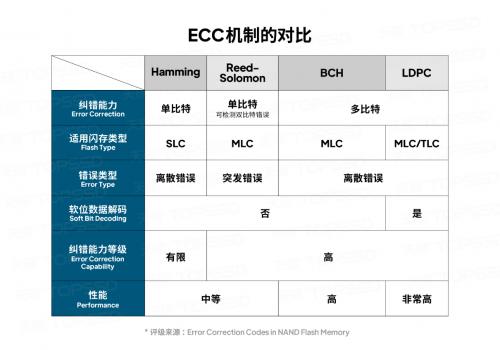

ECC is the key technology for correcting NAND errors. Unlike products that rely on simple error-correction algorithms, HEROSYS SSDs use LDPC codes.

LDPC (Low-Density Parity-Check)

LDPC codes, also known as Gallager codes, can be decoded using both hard-decision and soft-decision bit information. In Gallager's paper, low-density parity-check codes are defined as “a linear binary block code with a parity-check matrix having very sparse distribution of 1s,” meaning that the matrix contains far fewer 1s than 0s. They offer the following advantages:
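The parity-check definition can be made concrete with a toy matrix H: a word c is a valid codeword exactly when H·c = 0 over GF(2), and a nonzero result (the syndrome) flags bit errors. The Python sketch below adds Gallager's hard-decision bit-flipping decoder, which repeatedly flips the bits involved in the most failing checks. H is a hypothetical 4×6 example, far too small to be genuinely sparse; it is chosen so that every column has two 1s and no two columns share more than one check, which lets bit flipping correct any single-bit error. It illustrates the technique, not HEROSYS's decoder.

```python
from __future__ import annotations

# Hypothetical toy parity-check matrix: 4 checks x 6 bits. Each column has two
# 1s, and no two columns share more than one check. Real LDPC matrices have
# thousands of columns and are far sparser.
H = [
    [1, 1, 1, 0, 0, 0],
    [1, 0, 0, 1, 1, 0],
    [0, 1, 0, 1, 0, 1],
    [0, 0, 1, 0, 1, 1],
]


def syndrome(h: list[list[int]], word: list[int]) -> list[int]:
    """Compute H * word over GF(2); all zeros means every parity check passes."""
    return [sum(hij & wj for hij, wj in zip(row, word)) % 2 for row in h]


def bit_flip_decode(h: list[list[int]], word: list[int], max_iters: int = 10):
    """Gallager-style hard-decision bit flipping.

    Each iteration counts how many failing checks each bit participates in and
    flips the bits with the highest count. Returns (word, success).
    """
    word = list(word)
    for _ in range(max_iters):
        s = syndrome(h, word)
        if not any(s):
            return word, True
        votes = [sum(s[i] for i in range(len(h)) if h[i][j])
                 for j in range(len(word))]
        worst = max(votes)
        for j, v in enumerate(votes):
            if v == worst:
                word[j] ^= 1
    return word, not any(syndrome(h, word))


if __name__ == "__main__":
    codeword = [1, 1, 0, 1, 0, 0]        # satisfies every check in H
    print(syndrome(H, codeword))         # [0, 0, 0, 0]
    received = codeword.copy()
    received[5] ^= 1                     # simulate a single bit error from NAND
    print(syndrome(H, received))         # [0, 0, 1, 1]
    print(bit_flip_decode(H, received))  # ([1, 1, 0, 1, 0, 0], True)
```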

1. Low decoding complexity

2. Ability to decode both hard and soft information from NAND simultaneously

3. Good performance at short block lengths

4. “Near-capacity” performance for long blocks (close to the Shannon limit)
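The ability to use soft information can be illustrated with a soft-decision decoder. The Python sketch below uses the min-sum algorithm, a widely used simplification of belief propagation for LDPC codes (not necessarily what HEROSYS firmware runs), on a hypothetical 4×6 toy matrix. Inputs are log-likelihood ratios (LLRs): a plain hard read can be mapped to a fixed-magnitude LLR such as ±3, while soft reads provide graded confidence. The matrix, LLR values, and iteration limit are illustrative assumptions.

```python
from __future__ import annotations

# Hypothetical toy parity-check matrix (4 checks x 6 bits); each column has
# two 1s. Real LDPC matrices are far larger and sparser.
H = [
    [1, 1, 1, 0, 0, 0],
    [1, 0, 0, 1, 1, 0],
    [0, 1, 0, 1, 0, 1],
    [0, 0, 1, 0, 1, 1],
]


def syndrome(h: list[list[int]], word: list[int]) -> list[int]:
    """Compute H * word over GF(2); all zeros means every check passes."""
    return [sum(a & b for a, b in zip(row, word)) % 2 for row in h]


def min_sum_decode(h: list[list[int]], llr: list[float], max_iters: int = 20):
    """Soft-decision LDPC decoding with the min-sum algorithm.

    llr[j] = log(P(bit j = 0) / P(bit j = 1)): positive favours 0, negative
    favours 1, and the magnitude is the confidence of the read.
    Returns (bits, success).
    """
    m, n = len(h), len(llr)
    check_bits = [[j for j in range(n) if h[i][j]] for i in range(m)]
    # Variable-to-check messages start out as the channel LLRs.
    q = {(i, j): llr[j] for i in range(m) for j in check_bits[i]}
    bits = [1 if v < 0 else 0 for v in llr]
    for _ in range(max_iters):
        # Check-node update: sign product and minimum magnitude of the other inputs.
        r = {}
        for i in range(m):
            for j in check_bits[i]:
                others = [q[(i, k)] for k in check_bits[i] if k != j]
                sign = -1.0 if sum(v < 0 for v in others) % 2 else 1.0
                r[(i, j)] = sign * min(abs(v) for v in others)
        # Variable-node update: channel LLR plus every incoming check message.
        posterior = list(llr)
        for (i, j), msg in r.items():
            posterior[j] += msg
        bits = [1 if v < 0 else 0 for v in posterior]
        if not any(syndrome(h, bits)):
            return bits, True
        # Each check receives the posterior minus its own contribution.
        q = {(i, j): posterior[j] - msg for (i, j), msg in r.items()}
    return bits, False


if __name__ == "__main__":
    # LLRs for the codeword [1, 1, 0, 1, 0, 0]. Bit 5 was read with the wrong
    # sign but low confidence, so a plain hard decision would get it wrong.
    llr = [-4.0, -3.5, 3.0, -4.5, 2.5, -0.5]
    print(min_sum_decode(H, llr))  # ([1, 1, 0, 1, 0, 0], True)
```

In this example the two checks containing bit 5 both push it back toward 0 with more confidence than the weak read, so a single iteration recovers the codeword.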

LDPC codes are among the most powerful error-correction codes used in SSDs today. HEROSYS's in-house algorithms build on this foundation to achieve lower data error rates and higher product reliability:

Multiplied error-correction strength: By optimizing the density of the parity-check matrix and the decoding algorithms, we achieve error-correction capability several times that of conventional LDPC implementations, with notably greater stability in high-error-rate conditions such as extreme temperatures and radiation exposure.

Adaptation to high-density flash: For high-density architectures such as 3D NAND from Yangtze Memory Technologies (YMTC), we have optimized fault tolerance against threshold-voltage distribution shifts, reducing data errors caused by cell-to-cell interference.